CacheCow 2.0 Series:

HTTP Caching on the server

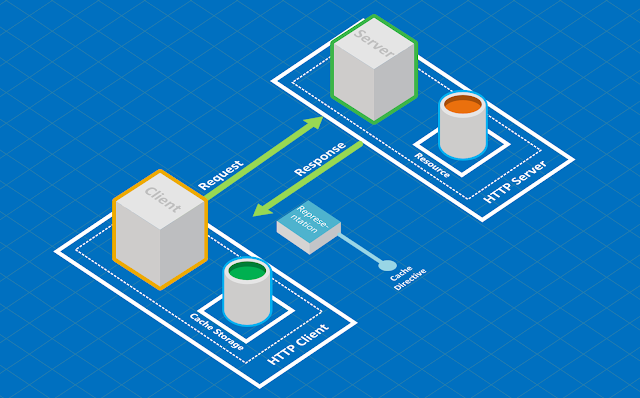

In HTTP Caching, the server has two main responsibilities:

- Supplying cache directives - including Cache-Control (and ETag, Last-Modified and Vary) headers

- Performing conditional validating requests

These two have been explained in full in Part 1 of this series (a quick review of that post might be useful to refresh your memory).

In CacheCow 1.x, it was assumed that resources can be updated only through the API, i.e. all modifications had to go through the API. If modifications were made outside the API, the agent making them was responsible for invalidating the API cache; obviously this is not always possible or architecturally correct. As I explained in Part 1, this was a big assumption, and even with it in place, the interaction between various resources made cache invalidation very difficult for the API layer: a change to a single resource invalidates its collection resource and, in the case of hierarchical resources, a change to a leaf resource invalidates the resources higher up.

CacheCow 2.x solves these problems by making the data and data providers take part in cache validation through a couple of new abstractions. The following two sections are essential to understanding the full value you get from CacheCow.Server, apart from setting the Cache-Control header, which is frankly not rocket science. But if you get bored and want to see some code, feel free to skip to the Getting Started section and come back to these later on.

ASP.NET Core supports HTTP Caching, why not use that instead?

ASP.NET Core provides several primitives for HTTP Caching:

- Generating cache directives (and responding to conditional requests)

- Caching middleware that caches representations on the server (an in-process HTTP proxy)

You are, of course, free to use these primitives. At the end of the day, generating cache directives requires a bunch of if/else statements and reading from some config (for the max-age, etc). But bear in mind these points:

- ASP.NET Core caching has started where CacheCow 0.x was. It suffers from essentially the same issues as CacheCow 0.x/1.x: the API layer is unaware of cache invalidation when it happens outside the API layer or when there is a relationship between different resources. In fact, CacheCow 1.x was capable of understanding the relationship between single and collection resources as long as you adhered to a simple naming convention, and it also provided an invalidation mechanism. AFAIK these do NOT exist in ASP.NET Core caching, and this could be harmful if you care about the consistency of your resources. (Consistency was explained in Part 1.)

- CacheCow.Server now provides the constructs to make the data source (and not the API) the authority with regard to ETag and conditional validation calls, as is normally the case. This also solves the problem of cache invalidation when data is modified outside the API, perhaps by the arrival of new batch data or when the underlying data store is exposed via different mechanisms, which is common in the industry.

- To my knowledge, caching middleware does not do validation. Again if you care about consistency of your resources then this is probably not for you.

The new shiny CacheCow.Server 2.x

As explained, CacheCow.Server moves control of cache validation to where it belongs: your data and data providers. In order to make that seamless and not pollute your API, these have been abstracted away into several constructs. They are explained in more detail on GitHub, so check that out if you need the next level of detail.

ITimedETagExtractor

CacheCow blends the versioning identity of a resource into TimedETag (an Either of Last-Modified or ETag).

ITimedETagExtractor extracts TimedETag from a resource. By default, extraction uses the SHA1 hash of the JSON-serialised response payload (which has understandable overhead), but it is very easy to provide an alternative mechanism for extracting the TimedETag. Many database tables have a timestamp column which you can use as Last-Modified. But since HTTP dates do not have sub-second accuracy, and this might mean missing updates done within the same second, it is best to turn that timestamp into an opaque hash-like value and use it as the ETag, e.g. like this:

    // requires: using System; using System.Linq;
    public static string TurnDatetimeOffsetToETag(DateTimeOffset dateTimeOffset)
    {
        // 8 bytes of UTC ticks followed by 2 bytes of offset (whole hours)
        var dateBytes = BitConverter.GetBytes(dateTimeOffset.UtcDateTime.Ticks);
        var offsetBytes = BitConverter.GetBytes((Int16)dateTimeOffset.Offset.TotalHours);
        return Convert.ToBase64String(dateBytes.Concat(offsetBytes).ToArray());
    }

For collection resources (e.g. orders vs. order), where there are a number of LastUpdated fields, all you need is Max(LastUpdated) and the total count. You can combine these two values into a byte array buffer and serve the base64 representation directly or, if you want it completely opaque, use its hash.
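
As a rough sketch of the idea above (not CacheCow's own code), this is one way to pack the count and the newest LastUpdated value into a buffer and produce either the readable or the opaque variant. The names `CollectionETag`, `FromCountAndMax` and `FromCountAndMaxHashed` are made up for illustration, and SHA-256 is just my choice of hash here.

```csharp
using System;
using System.Security.Cryptography;

public static class CollectionETag
{
    // Packs the newest LastUpdated (as UTC ticks) and the row count
    // into a 16-byte buffer: 8 bytes each.
    private static byte[] Pack(long count, DateTimeOffset maxLastUpdated)
    {
        var buffer = new byte[16];
        BitConverter.GetBytes(maxLastUpdated.UtcTicks).CopyTo(buffer, 0);
        BitConverter.GetBytes(count).CopyTo(buffer, 8);
        return buffer;
    }

    // Readable variant: base64 of the raw buffer.
    public static string FromCountAndMax(long count, DateTimeOffset maxLastUpdated)
    {
        return Convert.ToBase64String(Pack(count, maxLastUpdated));
    }

    // Opaque variant: base64 of a hash of the same buffer.
    public static string FromCountAndMaxHashed(long count, DateTimeOffset maxLastUpdated)
    {
        using (var sha = SHA256.Create())
        {
            return Convert.ToBase64String(sha.ComputeHash(Pack(count, maxLastUpdated)));
        }
    }
}
```

The count matters because deleting a row that is not the newest one leaves Max(LastUpdated) untouched, while adding or editing a row moves the maximum. Remember that the value still needs to be wrapped in double quotes when it is sent as an ETag header.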

You can implement the ICacheResource interface on your view models (the payloads you return from your actions) to extract the value and return TimedETag. It is a very simple interface with a single method.

ITimedETagQueryProvider

Generating TimedETag from the resources is all well and good, but it means that we have to bring the resource all the way to the API layer to generate the TimedETag. This is completely acceptable if we are then serving the resource. But what if a client is doing cache validation, asking us to respond with the whole resource only if things have changed (and with a 304 Not Modified status otherwise)? In that case, we might load the whole resource only to find out it has not changed, wasting computation effort. The pressure on the backend systems also stays the same regardless of whether the resource was modified or not. Bear in mind that in this case there is still some saving on network and computing, but surely we can do better than this.

The solution is to use ITimedETagQueryProvider to preemptively query your backend for the current status of the resource, i.e. its TimedETag. Usually getting that piece of information about the resource is much cheaper than returning the whole resource. For a single resource, you just need, e.g., the timestamp field, and for a collection resource, Max(timestamp) and the count. If you are using an RDBMS, all of this can be conveniently achieved in a single query, e.g. "SELECT COUNT(1), MAX(LastUpdated) FROM MyTable WHERE IsActive = 1".
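
To make the cheap-query idea a bit more concrete, here is a minimal sketch of running that query with plain ADO.NET. It is the kind of helper you might call from inside your own ITimedETagQueryProvider implementation; it does not show that interface itself (check the repo for its exact shape). `CollectionVersionQuery` and `Execute` are hypothetical names, the table and column names are the placeholders from the query above, and I am assuming LastUpdated is a date/time column that your provider can read with `GetDateTime`.

```csharp
using System;
using System.Data.Common;

public static class CollectionVersionQuery
{
    // The query from the text; MyTable, LastUpdated and IsActive are placeholders.
    public const string Sql =
        "SELECT COUNT(1), MAX(LastUpdated) FROM MyTable WHERE IsActive = 1";

    // Returns the row count and the newest LastUpdated value
    // (null when no rows match). Expects an already opened connection.
    public static (long Count, DateTime? MaxLastUpdated) Execute(DbConnection openConnection)
    {
        using (var command = openConnection.CreateCommand())
        {
            command.CommandText = Sql;
            using (var reader = command.ExecuteReader())
            {
                if (!reader.Read())
                {
                    return (0L, (DateTime?)null);
                }

                // COUNT comes back as int or long depending on the provider.
                var count = Convert.ToInt64(reader.GetValue(0));

                // MAX over zero rows yields NULL.
                var max = reader.IsDBNull(1) ? (DateTime?)null : reader.GetDateTime(1);

                return (count, max);
            }
        }
    }
}
```

The two returned values are all that is needed to build the collection's TimedETag, so the rows themselves never leave the database during validation.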

Understanding trade-offs of various approaches

All of the above said, you do not necessarily have to implement ITimedETagExtractor and ITimedETagQueryProvider. In fact, you can use CacheCow.Server out of the box and it will fulfil all server-side caching duties. The point is that if you would like optimal performance, you have to do a bit more work. The table below explains your various options and the benefits you get.

Table 1: CacheCow.Server - trade-offs and options

No need for storage anymore

CacheCow.Server 1.x needed some storage to keep the current TimedETag of the resource. Now that the TimedETag is generated or queried, there is no such need any more. Everything to do with EntityTagStore in CacheCow.Server has been removed from the repo.

Getting started with CacheCow.Server on ASP.NET Core MVC

The documentation on GitHub is pretty clear, I believe, but for the sake of completeness I am bringing some of it here too. This covers the basic case with default implementations.

Essentially, all you need is a filter to decorate your actions, specifying the cache expiry duration in seconds. There are a bunch of other knobs but at this point, let's focus on the default scenario.

1. Add the package from nuget

In your package-manager console type below:

PM> install-package CacheCow.Server.Core.Mvc

2. Add CacheCow's default dependencies

    public virtual void ConfigureServices(IServiceCollection services)
    {
        ... // usual startup code
        services.AddHttpCachingMvc(); // add HTTP Caching for Core MVC
    }

3. Decorate the action with the HttpCacheFactory filter

    public class MyController : Controller
    {
        [HttpGet]
        [HttpCacheFactory(300)]
        public IActionResult Get(int id)
        {
            ... // implementation
        }
    }

Here we are defining the expiry to be 300 seconds (= 5 minutes). This means the client will cache the result for 5 minutes and after 5 minutes will keep asking if the resource has changed using conditional GET requests (see Part 2 for more info).

4. Check all is working

That should be all you need to be up and running. Now make a call to your API and you should see the Cache-Control header. You can use Postman, Fiddler or any other tool; you will basically see something like this:

    Vary: Accept
    ETag: "SPQT7RzH1QgBAAEAAAA="
    Cache-Control: must-revalidate, max-age=300, private
    x-cachecow-server: validation-applied=True;validation-matched=False;short-circuited=False;query-made=True
    Date: Thu, 31 May 2018 17:35:42 GMT

As you can see, CacheCow has added Vary, ETag and Cache-Control. There is also a diagnostic header, x-cachecow-server, that explains what CacheCow has done to generate the response.

Now you can test the conditional case by sending a GET request with the header below:

If-None-Match: "SPQT7RzH1QgBAAEAAAA="

And the server will respond with 304 if your resource has not changed.
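
If you would rather script this check than use a GUI tool, something along these lines should do. It is only a sketch using the standard HttpClient; `ConditionalGetCheck` and its methods are names I made up, and the URL in the usage note is an assumption about where your API is running.

```csharp
using System;
using System.Net;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Threading.Tasks;

public static class ConditionalGetCheck
{
    // Builds a GET request carrying If-None-Match.
    // The ETag must include its surrounding double quotes.
    public static HttpRequestMessage BuildRequest(string url, string quotedETag)
    {
        var request = new HttpRequestMessage(HttpMethod.Get, url);
        request.Headers.IfNoneMatch.Add(EntityTagHeaderValue.Parse(quotedETag));
        return request;
    }

    // Returns true when the server answers 304 Not Modified.
    public static async Task<bool> IsNotModifiedAsync(
        HttpClient client, string url, string quotedETag)
    {
        using (var request = BuildRequest(url, quotedETag))
        using (var response = await client.SendAsync(request))
        {
            return response.StatusCode == HttpStatusCode.NotModified;
        }
    }
}
```

For example, `await ConditionalGetCheck.IsNotModifiedAsync(new HttpClient(), "http://localhost:5000/api/my/1", "\"SPQT7RzH1QgBAAEAAAA=\"")` should come back true as long as the resource behind that (hypothetical) URL has not changed since you received that ETag.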

More complex scenarios

Before we go into more detail, it might be useful to go to CacheCow's GitHub repo and review the ASP.NET Core MVC sample. Build and run it, play around and browse the code. This will make the discussions below closer to home, as it details how to cater for various scenarios.

Table 1 (further above) is your guide in deciding which interface to implement.

Implementing ITimedETagExtractor or ICacheResource

As mentioned above, serialisation is a heavy-handed approach to generating TimedETag. While OK for low-to-mid level load, for high performance you would be best off either implementing ICacheResource on your view models (what you return from your action) or, if you do not want your view models to depend on a caching library, implementing ITimedETagExtractor to extract TimedETag from your view models.

If you implement ICacheResource, you do not have to register anything additionally, but if you implement ITimedETagExtractor for your view models, you have to register the implementations.

There are examples in the samples.

Implementing ITimedETagQueryProvider

By implementing ITimedETagQueryProvider, you protect your backend system, since cache validation can be achieved without bringing the view model all the way to the API layer to extract/generate the TimedETag.

There are examples in the samples.

Dependency Injection and differentiation of ViewModels

Implementing ITimedETagQueryProvider or ITimedETagExtractor for different view models most likely involves different code. Since normally only a single implementation is registered against an interface, such an implementation would have to check the type and then apply the appropriate code, which breaks several programming principles.

You can use generic interfaces ITimedETagQueryProvider<TViewModel> and ITimedETagExtractor<TViewModel> to implement and then register. Then, in your filter, annotate the type of the view model. For example:

    [HttpGet]
    [HttpCacheFactory(0, ViewModelType = typeof(Car))]
    public IActionResult Get(int id)
    {
        var car = _repository.GetCar(id);
        return car == null
            ? (IActionResult)new NotFoundResult()
            : new ObjectResult(car);
    }

This means that you have implemented ITimedETagExtractor<Car> and ITimedETagQueryProvider<Car> and registered them in your IoC.

You register these in your application using extension methods in CacheCow (depending on which interfaces you have implemented):

    public virtual void ConfigureServices(IServiceCollection services)
    {
        ... // register stuff
        services.AddQueryProviderForViewModelMvc<TestViewModel, TestViewModelQueryProvider>();
        services.AddQueryProviderForViewModelMvc<IEnumerable<TestViewModel>, TestViewModelCollectionQueryProvider>();
    }

Other options for registering implementations are AddExtractorForViewModelMvc, AddSeparateDirectiveAndQueryProviderForViewModelMvc and AddDirectiveProviderForViewModelMvc. Some of these extension methods are essentially helpers that combine registration of multiple types.

Conclusions

CacheCow.Server now relies on the data and data providers to take part in TimedETag generation and cache validation, instead of storing and maintaining TimedETag and making guesses about cache validation. This removes the need for storage and makes CacheCow a reliable solution capable of providing caching with air-tight consistency.

ASP.NET Core's HTTP Caching features are a good start but they lack some fundamental features, thus I advise you to use CacheCow.Server instead. As the creator of CacheCow, I cannot guarantee that my views are free of bias, so just try it, see for yourself and pick what works for you.