Introduction

[Level C3] This post is a follow-up to the question/discussion point Darrel Miller has started here. The question is: in the REST world, what is coupling and how can we achieve decoupling? Although this post can be read independently, it is best to read his post first. [This discussion carries on in the next related post]

Motivation

REST has a strong focus on decoupling client and server. With REST awareness and adoption increasing, the need to define new best practices has become more apparent. This is particularly important in light of how much can nowadays be achieved in the browser, a previously limited client. The rise of Single Page Applications (SPAs) is a testament to the popularity of building a rich client in what used to be called a thin client. The diversity of available clients and their capabilities has, in a way, forced us towards REST and towards decoupling client and server.

But recently, some have felt that too much power and control has shifted towards the client and that the server has been reduced to a mere data provider. One such group is ROCA, which defines a set of best practices that are in clear opposition to SPA paradigms. I actually meant to write a post on ROCA (which I hopefully will soon), but I suppose this post can serve as a primer since the issue in question is relevant.

Background

Darrel defines coupling as "a measure of how changes in one thing might cause changes in the other". I would very much go with this definition but I would like to expand upon it.

Coupling is a software design (logical) and architecture (physical) anti-pattern. Loose coupling, on the other hand, is a virtue that allows different compartments (I am intentionally avoiding the bloated words module and component) of the system to change independently.

In the world of code (logical), we use the Single Responsibility Principle (the S in SOLID) to decouple pieces of code: a class should have a single reason to change. Arguably, the rest of the SOLID principles deal with various degrees of decoupling. For example, D (Dependency Inversion) is about not baking in the dependency.

On the other hand, in SOA (physical) we loosely couple the services. Services can depend on other services, but they should be able to maintain a reasonable level of functionality if other services go down. The key task in building a successful SOA is defining service boundaries. Another important concept is achieving cohesion: keeping all related components together in the same service and resisting the urge to break a service into two compartments when the boundary between them is weak.

Defining boundary and achieving cohesion

Coupling, in other words, is baking knowledge of something into one compartment when it is the concern of another compartment.

When I buy stuff from Amazon and it is delivered while I am not at home, they leave a card with which I can claim my package at the depot. The card carries just an Id in the form of a barcode. It does not carry the row and shelf number where my package is kept at the depot. If it did, the clerk could use the numbers on the card to fetch the package more easily. But that would bake knowledge of the location into something that does not need to know it, and what if they had to move the package? That is why the clerk zaps the barcode and the location is shown in the system. They can happily change the location as long as the Id does not change.

On the other hand, the depot does not need to know what is in the package; it is not its concern. Knowing might be helpful in rare scenarios, but that is not worth considering.
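The card-and-depot arrangement can be sketched in a few lines of Python. The `Depot` class, its method names and the `PKG-0042` Id are all made up for illustration; the only point is that the card holds an opaque Id while the location lives solely inside the depot.

```python
class Depot:
    """Hypothetical depot: the only place that knows where a package sits."""

    def __init__(self):
        # Maps an opaque package Id to its (row, shelf) location.
        self._location_by_id = {}

    def store(self, package_id, row, shelf):
        self._location_by_id[package_id] = (row, shelf)

    def move(self, package_id, row, shelf):
        # Relocating a package changes nothing outside the depot.
        self._location_by_id[package_id] = (row, shelf)

    def locate(self, package_id):
        return self._location_by_id[package_id]


# The card left at the door carries only the Id, never the row or shelf.
card = "PKG-0042"

depot = Depot()
depot.store(card, row=3, shelf=7)
depot.move(card, row=9, shelf=1)   # the card is still valid after the move
location = depot.locate(card)
```

Had the card carried `row=3, shelf=7`, the move would have silently invalidated it. Note also that the depot stores nothing about the package contents.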

So the key to defining boundaries and achieving cohesion is to understand whose concern an abstraction is. We will present 3 models here: server-concern, client-concern and mixed-concern. In brief, it depends.

Server-Concern

In this case, only the server needs to know about an abstraction. The problem happens when the concept oozes out to the client and the client gets to know server implementation details (exposing server bowels).

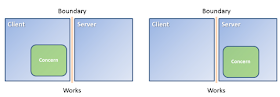

Figure: Server-Concern

Example

A typical example is getting the list of most recent contacts:

GET /api/contacts?lastUsedMoreThan=2012-05-06&count=20

GET /api/contacts/mostRecent

In the first case, the client gets to know that the server keeps a lastUsed value for contacts. This prevents the server from improving the algorithm, for example by also taking into account the number of times we have used the contact. In the second case, however, the server hides its implementation, so the change can be made without breaking the client.
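A minimal sketch of why the second URL leaves the server free to change. Everything here (the `Contact` fields, `most_recent_v1`, `most_recent_v2`, the scoring weights and the fixed `today` value) is hypothetical and not taken from any real API. It only shows that the handler behind `/api/contacts/mostRecent` can swap its ranking while the client's request stays the same.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Contact:
    name: str
    last_used: date   # hypothetical server-side field
    use_count: int    # hypothetical server-side field


def most_recent_v1(contacts, count=20):
    """First implementation: rank purely by last-used date."""
    return sorted(contacts, key=lambda c: c.last_used, reverse=True)[:count]


def most_recent_v2(contacts, count=20, today=date(2012, 12, 1)):
    """Later implementation: also reward frequently used contacts.

    The weighting is invented for illustration only.
    """
    def score(c):
        days_idle = (today - c.last_used).days
        return c.use_count - 0.1 * days_idle

    return sorted(contacts, key=score, reverse=True)[:count]


# The route can point at either function; clients never see which one.
HANDLERS = {"/api/contacts/mostRecent": most_recent_v2}


def handle(path, contacts):
    """Dispatch a request path to its handler and return contact names."""
    return [c.name for c in HANDLERS[path](contacts)]
```

With `lastUsedMoreThan` in the query string, the client would have been tied to the first ranking and the switch to `most_recent_v2` would have needed a client change.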

Client-Concern

In this scenario, the abstraction is purely a client concept. The problem happens when the server starts to make decisions for the client.

Figure: Client-Concern

Example

A typical example is pagination:

GET /api/contacts/page/11

GET /api/contacts?skip=200&count=20

The number of pages available and the number of records in each page are a client concern. This kind of detail will differ between the iPhone, the desktop and the tablet (and which tablet). In the first case, the server makes the decision on the number of records per page (and even treats the page as a resource), while in the second case knowledge of pagination is confined to the client, since it is the client's concern.
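The skip/count approach can be sketched as follows. The function names `list_contacts` and `page_to_window`, the sample data and the page sizes are assumptions made for illustration. The server only returns a window of records, and each client maps its own idea of a page onto that window.

```python
def list_contacts(all_contacts, skip=0, count=20):
    """Server side: return a plain window of records, with no notion of 'page'."""
    return all_contacts[skip:skip + count]


def page_to_window(page_number, page_size):
    """Client side: translate a 1-based page number into skip/count."""
    return {"skip": (page_number - 1) * page_size, "count": page_size}


# Hypothetical data set of 500 contacts.
contacts = [f"contact-{i}" for i in range(1, 501)]

# A desktop client might show 20 rows per page, a phone only 8.
desktop = page_to_window(page_number=11, page_size=20)
phone = page_to_window(page_number=11, page_size=8)

desktop_rows = list_contacts(contacts, **desktop)
phone_rows = list_contacts(contacts, **phone)
```

For the desktop client, page 11 works out to `skip=200` and `count=20`, which is the second request shown above. The phone asks the same server for a different window without the server knowing anything about pages.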

Mixed-Concern

In this scenario, both server and client need to work in accord for the feature to work. The problem happens when the server or the client assumes that the other definitely implements the abstraction.

Figure: Mixed-Concern

Example

A typical example is HTTP caching. For HTTP caching to work, client and server need to work in tandem. The server needs to return, with each resource, a Cache-Control header, an ETag or a Last-Modified header, and the client needs to use these values in its future conditional requests with If-None-Match or If-Modified-Since.

Figure: No assumption in implementation on the other side helps both sides to carry on working without the feature

However, if the server does not provide caching, or the client does not use and respect the caching parameters from the server, the system does not break, although the experience may be inferior or suboptimal.
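A simplified in-memory sketch of this graceful degradation, with no real HTTP involved. The `serve` function, the `Client` class and the way the ETag is derived from a hash of the body are assumptions made for illustration. Only the header names (`ETag`, `If-None-Match`, `Cache-Control`) and the 200/304 status codes come from HTTP itself.

```python
import hashlib


def serve(body, request_headers, caching_enabled=True):
    """Server side: return (status, headers, body) for a single resource."""
    if not caching_enabled:
        # A server that offers no validators still serves the resource.
        return 200, {}, body
    etag = '"' + hashlib.sha256(body.encode()).hexdigest()[:16] + '"'
    if request_headers.get("If-None-Match") == etag:
        return 304, {"ETag": etag}, None
    # no-cache lets the client store the response but asks it to revalidate.
    return 200, {"ETag": etag, "Cache-Control": "no-cache"}, body


class Client:
    """Client side: uses validators when offered, works fine without them."""

    def __init__(self):
        self.cached_body = None
        self.cached_etag = None

    def get(self, server):
        headers = {}
        if self.cached_etag is not None:
            headers["If-None-Match"] = self.cached_etag
        status, response_headers, body = server(headers)
        if status == 304:
            return self.cached_body
        # Full response: remember the validator only if the server sent one.
        self.cached_body = body
        self.cached_etag = response_headers.get("ETag")
        return body
```

Against a caching server the second `get` is answered with a 304 and the stored body is reused. Against a server called with `caching_enabled=False`, the same client simply receives a full 200 response every time.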

Diversity and compromise

While we have the 3 models above, there are cases where the same feature can be implemented in different ways.

Let's take an example from engineering. Where do you put the AC/DC power transformer? My PC has the transformer in its power supply (item 6), which is the equivalent of Client-Concern. My laptop uses a power adaptor and takes no mains supply, which is the equivalent of Server-Concern. On the other hand, my electric toothbrush splits the transformer between the charger and the toothbrush itself, so it works by magnetic induction (analogous to mixed-concern). This is clearly a compromise, but the thinking behind it is to make the toothbrush waterproof and safe.

Conclusion

Darrel's definition is excellent, but we have expanded upon it since change (maintenance) is only one facet of the concept. The others are concern (requirement) and knowledge of that concern in a compartment (implementation).

We discussed three models: server-concern, client-concern and mixed-concern. Each of these is a valid pattern, but each comes with its own anti-pattern to be aware of. So in short: it depends.

This is a great expansion of my original thoughts. I especially like your pagination example of a client concern. Definitely food for thought.

Thanks. This is a hot topic.

In addition to pagination, we have other examples such as conditional loading of application logic, e.g. JavaScript resources. I will try to think of a few more for my post on ROCA.

Actually, caching isn't mixed concern by default; it was explicitly defined to default to cacheability (heuristic caching), while giving the server control if it wants to explicitly take it.

Your observation is accurate as http://www.w3.org/Protocols/rfc2616/rfc2616-sec13.html#sec13.4 clearly says everything is cacheable unless specified otherwise using cache-control headers.

This would probably have worked fine on the internet back in the early 2000s. Yet, in a real-world application very few resources can be left on their own to be cached indefinitely by the client; this includes resources such as CSS and JS files, as they change often. So most web platforms include the no-cache directives by default, and as such there is a burden on the server to take control of that. So I lean towards the view that RFC 2616 would perhaps have been better off with no caching as the default.

For me (I had to implement the full client-server stack of HTTP caching for my open source project), the server is concerned with controlling caching, its metadata (ETag, Last-Modified) and its validation, while the client is concerned with fully comprehending the directives, and with storage and validation. So I would say it is mixed-concern.

By the way, any thoughts on CSDS I talked about here: http://byterot.blogspot.co.uk/2012/11/client-server-domain-separation-csds-rest.html

Would be great to have your views on it.