- Part 1 - CacheCow 2.0 is here - supporting .NET Standard and ASP.NET Core MVC

- Part 2 - CacheCow.Client 2.0 [This post]

- Part 3 - CacheCow.Server 2.0: Using it on ASP.NET Core MVC

- Part 4 - CacheCow.Server for ASP.NET Web API [Coming Soon]

- Epilogue: side-learnings from supporting Core [Coming]

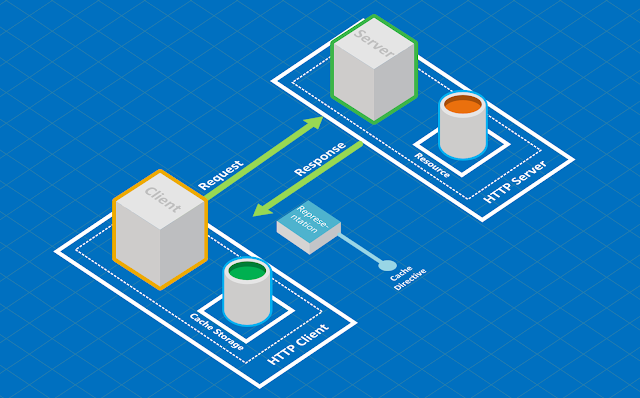

State of Client HTTP Caching in .NET

Before CacheCow, the only way to use HTTP caching was to use Windows/IE caching through WebRequestHandler as Darrel explains here. AFAIK, this class no longer exists in .NET Standard due to its tight coupling with Windows/IE implementations.

I set out to build a store-independent caching story in .NET around 6 years ago and named it CacheCow, and after all these years I am still committed to maintaining that effort.

Apart from full-blown HTTP caching, I had other ambitions in the beginning; for example, I planned to let you limit caching per domain. It became evident that this feature is neither critical nor possible in all storage technologies. The underlying data structure required for cache storage is key-value, while this feature required more complex querying. I managed to implement it for some storages, but it was never really used. That is why I no longer pursue this feature, and it has been removed from CacheCow.Client 2.0. Unlike browsers, virtually all HttpClient instances communicate with only a handful of APIs, and storage in this day and age is hardly a problem.

CacheCow.Client Features

The features of CacheCow 2.0 are pretty much unchanged since 1.x, other than that it now supports .NET Standard 2.0+, hence you can use it in .NET Core and on platforms other than Windows (Linux/Mac).

In brief:

- Supporting .NET Framework 4.5.2+ and .NET Standard 2.0+

- Store cacheable responses

- Supports In-Memory and Redis storages - SQL is coming too (and easy to build your own)

- Manage separate query/storage of representations according to server's Vary header

- Validating GET calls to validate cache after expiry

- Conditional PUT calls to modify a resource only if not changed since (can be turned off)

- Exposing diagnostic x-cachecow-client header to inform of the caching result

Using CacheCow.Client is effortless and there are hardly any knobs to adjust - it hides away all the caching cruft that can get in your way of consuming an API efficiently.

CacheCow.Client has been created as a DelegatingHandler that needs to be added to the HttpClient's HTTP pipeline to intercept the calls. We will look at some typical use cases.
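To make the pipeline idea concrete, here is a minimal sketch of a DelegatingHandler written with nothing but System.Net.Http. LoggingHandler and PipelineExample are hypothetical names used for illustration and are not part of CacheCow; CachingHandler sits in the same position in the pipeline, but consults the cache before deciding whether to call the inner handler.

```csharp
using System;
using System.Net.Http;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical handler for illustration only; not part of CacheCow.
public class LoggingHandler : DelegatingHandler
{
    protected override async Task<HttpResponseMessage> SendAsync(
        HttpRequestMessage request, CancellationToken cancellationToken)
    {
        // Runs before the request leaves the process. A caching handler
        // could return a stored response here without calling base.SendAsync.
        Console.WriteLine($"--> {request.Method} {request.RequestUri}");

        var response = await base.SendAsync(request, cancellationToken)
            .ConfigureAwait(false);

        // Runs after the response comes back. A caching handler
        // could store a cacheable response here.
        Console.WriteLine($"<-- {(int)response.StatusCode}");
        return response;
    }
}

public static class PipelineExample
{
    public static HttpClient CreateClient()
    {
        // The outer handler wraps HttpClientHandler, which does the network I/O.
        var handler = new LoggingHandler { InnerHandler = new HttpClientHandler() };
        return new HttpClient(handler);
    }
}
```

Every call made through the returned client passes through SendAsync on the way out and on the way back, which is the hook a caching handler needs.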

Basic Use Case

Let's imagine you have a service that needs to consume a cacheable resource and you are using HttpClient. Here are the steps to follow:

Add a NuGet dependency to CacheCow.Client

Use command-line or UI to add a dependency to CacheCow.Client version 2.x:

> install-package CacheCow.Client

Create an HttpClient

CacheCow.Client provides a helper method to create an HttpClient with caching enabled:

    var client = ClientExtensions.CreateClient();

All this does is create an HttpClient with CacheCow's CachingHandler added to the pipeline in front of the HttpClientHandler.

You can pass the cache store (an implementation of ICacheStore) in an overload of this method but here we are going to use the default In-Memory store suitable for our use case.

Make two calls to the cacheable resource

Now we make a GET call to get a cacheable resource and then another call to get it again. By examining the CacheCow header, we can ascertain that the second response came directly from the cache and never even hit the network.

    const string CacheableResource = "https://code.jquery.com/jquery-3.3.1.slim.min.js";

    var response = client.GetAsync(CacheableResource)
        .ConfigureAwait(false).GetAwaiter().GetResult();
    var responseFromCache = client.GetAsync(CacheableResource)
        .ConfigureAwait(false).GetAwaiter().GetResult();

    Console.WriteLine(response.Headers.GetCacheCowHeader().ToString());
    // outputs "2.0.0.0;did-not-exist=true"
    Console.WriteLine(responseFromCache.Headers.GetCacheCowHeader().ToString());
    // outputs "2.0.0.0;did-not-exist=false;retrieved-from-cache=true"
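If you want to act on the diagnostic header in code, say in an integration test, the two sample values above suggest a version followed by semicolon-separated name=value pairs. The sketch below splits such a string into a dictionary. CacheCowHeaderText is a hypothetical helper, and the format is inferred only from the samples shown here; in real code the object returned by GetCacheCowHeader() is probably the better thing to work with.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper, not part of CacheCow. The format is inferred from the
// two sample outputs above: "<version>;name=value;name=value".
public static class CacheCowHeaderText
{
    public static Dictionary<string, string> Parse(string headerValue)
    {
        var result = new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);
        var parts = headerValue.Split(new[] { ';' }, StringSplitOptions.RemoveEmptyEntries);

        for (var i = 0; i < parts.Length; i++)
        {
            var part = parts[i].Trim();
            var eq = part.IndexOf('=');
            if (eq < 0)
            {
                // The first segment has no '=' and carries the version.
                if (i == 0) result["version"] = part;
                continue;
            }
            result[part.Substring(0, eq)] = part.Substring(eq + 1);
        }
        return result;
    }
}
```

Passing the string printed for responseFromCache to Parse gives you a dictionary in which the retrieved-from-cache entry can be checked directly.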

Using alternative storages - Redis

If you have 10 boxes calling an API and they are using an In-Memory store, the response would have to be cached separately on each box and the origin server will potentially be hit 10 times. Also, due to this dispersion, the usefulness of the cache is reduced and you will see a lower cache hit ratio.

In high-throughput scenarios you would want to use a distributed cache such as Redis. CacheCow.Client 1.x used to support Azure Fabric Cache (discontinued by Microsoft), two versions of Memcached, SQL Server, ElasticSearch, MongoDB and even File. Starting with 2.x, new storages will be added only when they absolutely make sense. There is a plan to migrate the SQL Server storage, but as for the others, there are currently no such plans. Having said that, it is very easy to implement your own and we will look into this further down in this post (I have chosen LMDB, a super fast file-based storage by Howard Chu).

For this case, we would like to use Redis storage. In case you do not have access to an instance of Redis, you can download (Mac/Linux or Windows) and run Redis locally without installation.

Add a dependency to Redis store package

After running your Redis (or perhaps creating one in the cloud), add a dependency to CacheCow.Client.RedisCacheStore:

> install-package CacheCow.Client.RedisCacheStore

Create an HttpClient with a Redis store

We use the ClientExtensions to create a client with a Redis store - here it connects to a local cache:

    var client = ClientExtensions.CreateClient(new RedisStore("localhost"));

The CacheCow.Client.RedisCacheStore library uses StackExchange.Redis, the de-facto Redis client library in .NET, hence it can accept a connection string according to StackExchange.Redis conventions, as well as an IDatabase, etc., to initialise the store.
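As an illustration of those conventions, a StackExchange.Redis connection string is a comma-separated list of endpoints and options. The sketch below relies only on the RedisStore(string) constructor already used above. The host name and password are placeholders, RedisClientFactory is a hypothetical name, and the namespace in the third using line is an assumption based on the package name.

```csharp
using System.Net.Http;
using CacheCow.Client;
using CacheCow.Client.RedisCacheStore; // assumed namespace, taken from the package name

// Hypothetical factory for illustration only.
public static class RedisClientFactory
{
    public static HttpClient Create()
    {
        // Placeholder endpoint and password; replace with your own.
        // password, ssl and abortConnect are StackExchange.Redis options.
        const string connectionString =
            "my-redis.example.com:6380,password=<your-password>,ssl=true,abortConnect=false";

        return ClientExtensions.CreateClient(new RedisStore(connectionString));
    }
}
```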

Make two calls to a cacheable resource

The rest of the code is the same as in the In-Memory scenario: making two HTTP calls to the same cacheable resource and observing the CacheCow headers - see above.

Cache Validation

Cacheable resources provide a validator so that the client can validate whether the version it has is still current. This was explained in the previous post, but essentially the representation's ETag (or Last-Modified) header gets used to validate the cached resource with the server. CacheCow.Client already does this for you, so you do not have to worry about it.

Another aspect of cache validation is on PUT calls, so that the resource gets modified only if it has not changed since you received it. This is essentially optimistic concurrency, which is beautifully implemented in HTTP using validators. CacheCow.Client does this by default, but there is a property on CachingHandler if you need to turn it off. If you wish to do so (or to change any other aspect of the CachingHandler), create the client without ClientExtensions:

    var handler = new CachingHandler()
    {
        InnerHandler = new HttpClientHandler(),
        UseConditionalPut = false
    };

    var c = new HttpClient(handler);
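To show what this validation looks like at the HTTP level, here is a rough sketch of a conditional GET and a conditional PUT written by hand with a plain HttpClient. ManualValidation and its method names are hypothetical, and this is not CacheCow's code; it only illustrates the If-None-Match and If-Match exchanges that CacheCow.Client takes care of for you.

```csharp
using System.Net;
using System.Net.Http;
using System.Threading.Tasks;

// Hypothetical helper showing validation on the wire; not part of CacheCow.
// The etag argument must include its quotes, e.g. "\"abc123\"".
public static class ManualValidation
{
    // Conditional GET: ask for the body only if the ETag has changed.
    public static async Task<bool> IsStillCurrentAsync(
        HttpClient client, string url, string etag)
    {
        using (var request = new HttpRequestMessage(HttpMethod.Get, url))
        {
            request.Headers.TryAddWithoutValidation("If-None-Match", etag);
            using (var response = await client.SendAsync(request).ConfigureAwait(false))
            {
                // 304 Not Modified means the cached copy can be reused.
                return response.StatusCode == HttpStatusCode.NotModified;
            }
        }
    }

    // Conditional PUT: update only if the server's copy still has this ETag.
    public static async Task<bool> TryUpdateAsync(
        HttpClient client, string url, string etag, HttpContent newContent)
    {
        using (var request = new HttpRequestMessage(HttpMethod.Put, url))
        {
            request.Content = newContent;
            request.Headers.TryAddWithoutValidation("If-Match", etag);
            using (var response = await client.SendAsync(request).ConfigureAwait(false))
            {
                // A server honouring If-Match answers 412 Precondition Failed
                // when the resource changed in the meantime, so this returns false.
                return response.IsSuccessStatusCode;
            }
        }
    }
}
```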

There are a bunch of other knobs provided for edge cases so that you can modify the default behaviour, but they are pretty self-explanatory and not worth going into in much detail. Just browse the public properties of CachingHandler; GitHub or StackOverflow is the best place to discuss if you have a question.

Supporting other storages - implementing ICacheStore for LMDB

CacheCow separates the storage from the HTTP caching functionality, hence it is possible to plug in your own storage with a few lines of code.

ICacheStore is a simple interface with 4 async methods:

    public interface ICacheStore : IDisposable
    {
        Task<HttpResponseMessage> GetValueAsync(CacheKey key);
        Task AddOrUpdateAsync(CacheKey key, HttpResponseMessage response);
        Task<bool> TryRemoveAsync(CacheKey key);
        Task ClearAsync();
    }
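Before moving to a real database, it may help to see the smallest possible shape of an implementation. The sketch below keeps serialized responses in a ConcurrentDictionary. DictionaryStore is a hypothetical name (CacheCow already ships its own In-Memory store), and it uses the same serializer calls and CacheCow namespaces as the LMDB implementation further down.

```csharp
using System;
using System.Collections.Concurrent;
using System.IO;
using System.Net.Http;
using System.Threading.Tasks;
using CacheCow.Client;
using CacheCow.Client.Headers;
using CacheCow.Common;

// Hypothetical store for illustration; CacheCow already has an In-Memory store.
public class DictionaryStore : ICacheStore
{
    private readonly ConcurrentDictionary<string, byte[]> _items =
        new ConcurrentDictionary<string, byte[]>();
    private readonly MessageContentHttpMessageSerializer _serializer =
        new MessageContentHttpMessageSerializer();

    // key.Hash is a byte array, so convert it to a string to use as a dictionary key.
    private static string ToId(CacheKey key) => Convert.ToBase64String(key.Hash);

    public async Task AddOrUpdateAsync(CacheKey key, HttpResponseMessage response)
    {
        using (var ms = new MemoryStream())
        {
            await _serializer.SerializeAsync(response, ms).ConfigureAwait(false);
            _items[ToId(key)] = ms.ToArray();
        }
    }

    public async Task<HttpResponseMessage> GetValueAsync(CacheKey key)
    {
        // Returns null on a miss, as the LMDB implementation does.
        if (!_items.TryGetValue(ToId(key), out var bytes))
            return null;

        return await _serializer.DeserializeToResponseAsync(new MemoryStream(bytes))
            .ConfigureAwait(false);
    }

    public Task<bool> TryRemoveAsync(CacheKey key) =>
        Task.FromResult(_items.TryRemove(ToId(key), out _));

    public Task ClearAsync()
    {
        _items.Clear();
        return Task.CompletedTask;
    }

    public void Dispose() { }
}
```

The four methods map one-to-one onto key-value operations, which is why most storages can be plugged in with so little code.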

LMDB is a lightning-fast database (as the name implies) that is supported in .NET thanks to Cory Kaylor's OSS project Lightning.NET. The project needs some more love and care, fixing some of the build issues and updating to the latest frameworks, but it is great work.

This scenario is especially useful if you need a local persistent store.

The implementation is pretty straightforward: we use the Put, Get, Delete and TruncateDatabase methods of LightningTransaction to implement the AddOrUpdateAsync, GetValueAsync, TryRemoveAsync and ClearAsync functionality. For Dispose, we just need to dispose the lightning environment.

Here is a pretty typical implementation:

    using System;
    using System.IO;
    using System.Net.Http;
    using System.Text;
    using System.Threading.Tasks;
    using CacheCow.Client;
    using CacheCow.Client.Headers;
    using CacheCow.Common;
    using LightningDB;

    namespace CacheCow.Client.Lightning
    {
        public class LightningStore : ICacheStore
        {
            private readonly LightningEnvironment _environment;
            private readonly string _databaseName;
            private readonly MessageContentHttpMessageSerializer _serializer = new MessageContentHttpMessageSerializer();

            public LightningStore(string path, string databaseName = "CacheCowClient")
            {
                _environment = new LightningEnvironment(path);
                _environment.MaxDatabases = 1;
                _environment.Open();
                _databaseName = databaseName;
            }

            public async Task AddOrUpdateAsync(CacheKey key, HttpResponseMessage response)
            {
                var ms = new MemoryStream();
                await _serializer.SerializeAsync(response, ms);
                using (var tx = _environment.BeginTransaction())
                using (var db = tx.OpenDatabase(_databaseName,
                    new DatabaseConfiguration { Flags = DatabaseOpenFlags.Create }))
                {
                    tx.Put(db, key.Hash, ms.ToArray());
                    tx.Commit();
                }
            }

            public Task ClearAsync()
            {
                using (var tx = _environment.BeginTransaction())
                using (var db = tx.OpenDatabase(_databaseName,
                    new DatabaseConfiguration { Flags = DatabaseOpenFlags.Create }))
                {
                    tx.TruncateDatabase(db);
                    tx.Commit();
                }

                return Task.CompletedTask;
            }

            public void Dispose()
            {
                _environment.Dispose();
            }

            public async Task<HttpResponseMessage> GetValueAsync(CacheKey key)
            {
                using (var tx = _environment.BeginTransaction())
                using (var db = tx.OpenDatabase(_databaseName,
                    new DatabaseConfiguration { Flags = DatabaseOpenFlags.Create }))
                {
                    var data = tx.Get(db, key.Hash);
                    if (data == null || data.Length == 0)
                        return null;

                    var ms = new MemoryStream(data);
                    return await _serializer.DeserializeToResponseAsync(ms);
                }
            }

            public Task<bool> TryRemoveAsync(CacheKey key)
            {
                using (var tx = _environment.BeginTransaction())
                using (var db = tx.OpenDatabase(_databaseName,
                    new DatabaseConfiguration { Flags = DatabaseOpenFlags.Create }))
                {
                    tx.Delete(db, key.Hash);
                    tx.Commit();
                }

                return Task.FromResult(true);
            }
        }
    }
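Plugging the new store in should then look the same as with Redis. The following is a sketch under a few assumptions: LightningExample is a hypothetical name, the directory under the temp folder is just an example location for the LMDB files, and the URL is the one used earlier in this post.

```csharp
using System;
using System.IO;
using System.Net.Http;
using System.Threading.Tasks;
using CacheCow.Client;
using CacheCow.Client.Headers;
using CacheCow.Client.Lightning;

// Hypothetical usage example for the LightningStore defined above.
public static class LightningExample
{
    public static async Task RunAsync()
    {
        // Example location for the LMDB files; any writable directory should do.
        var path = Path.Combine(Path.GetTempPath(), "cachecow-lmdb");
        Directory.CreateDirectory(path);

        using (var client = ClientExtensions.CreateClient(new LightningStore(path)))
        {
            const string url = "https://code.jquery.com/jquery-3.3.1.slim.min.js";

            // The first call goes to the network and fills the store.
            await client.GetAsync(url).ConfigureAwait(false);

            // The second call should be answered from the LMDB-backed cache;
            // the diagnostic header tells you whether it was.
            var second = await client.GetAsync(url).ConfigureAwait(false);
            Console.WriteLine(second.Headers.GetCacheCowHeader().ToString());
        }
    }
}
```

Because the data lives on disk, a cached response can survive a process restart, which is the point of choosing a local persistent store.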

Conclusion

CacheCow.Client is simple and straightforward to get started with. It supports In-Memory and Redis storages, and a storage of your choice can be plugged in with a handful of lines of code - here we demonstrated that for LMDB. It is capable of carrying out GET and PUT validation, making your client more efficient and your data more consistent.

In the next post, we will look into Server scenarios in ASP.NET Core MVC.