A Health Endpoint is a common practice in building APIs. Unlike the other resources of a REST API, such an endpoint does not carry out a business activity; it returns the status of the service. While it can gather and return some data, it is the HTTP status that defines whether the service is "Up or Down". These endpoints commonly check a bunch of configurations and connectivity with the dependent services, and even make a few calls for a "Test Customer" to make sure business activity can be achieved.

Something about the above just doesn't feel right to me - and this post is an exercise in defining what I mean by that. I will explain what the problems with the Health API are and suggest how to "fix" it.

What is the health of an API anyway? The server being up and running and capable of returning status 200? The server and all its dependencies running and returning 200? The server and all its dependencies running and capable of returning 200 in a reasonable amount of time? The API being able to accomplish some business activity? Or the API being able to accomplish a certain activity for a test user? The API being able to accomplish all activities within a reasonable time? The API being able to accomplish all activities with its 95th percentile falling within an agreed SLA?

A Service is a complex beast. While its complexity is nowhere near that of a living organism, it is useful to draw a parallel with one. I remember from my previous medical life that the definition of health - provided by none other than the WHO - goes like this:

"Health is a state of complete physical, mental and social well-being and not merely the absence of disease or infirmity."

In other words, defining the health of an organism is a complex and involved process requiring deep understanding of the organism and how it functions. [Well, we are lucky that we are only dealing with distributed systems and their services (or MicroServices if you like) and not living organisms.] For services, instead of health, we define the Quality of Service as a quantitative measure of a service's health.

Quality of Service is normally a bunch of orthogonal SLAs, each defining a measurement for one aspect of the service. In terms of monitoring, Availability is the most important aspect of a service to gauge and closely follow. Availability cannot simply be measured by the amount of time the servers dedicated to a service have been up. Apart from being reachable, the service needs to respond within an acceptable time (Low Latency) and has to be able to achieve its business activity (Functional) - there is no point in a server being reachable and returning a 503 error within milliseconds. So the number of error responses (as a deviation from the baseline, which can be normal validation and business rule errors) also comes into play.

So the question is: how can we expose an endpoint inside a service that aggregates all of the above facets and reports the health of the service? The simple answer is that we cannot, and we should not commit ourselves to doing it. Why? Let's take some simple help from algebra.

[Figure: An API/Service maps an input domain to an output domain (codomain). Availability is a function of the output domain.]

The API/Service (f) is basically a function that maps the input domain (I) to an output domain (O). So:

O = f(I)

Availability (A) is a function (a) of the output domain, since it has to aggregate errors, latencies, etc:

A = a(O)

Substituting the first into the second:

A = a(f(I))

A cannot be measured without I - which for a real service is a very large set. It also needs all of f - not your subset bypass-authentication-use-test-customer method.

So one approach is to sit outside the service and deal only with the output domain, in a sort of proxy or by monitoring server logs. Netflix have done a ton of work on this and have open sourced it as Hystrix, and no wonder I have not heard anything about the magical Health Endpoint in there (now there is an alternative endpoint which I will explain later). But if you want to do it within the service, you need the whole input domain and not just your "Test Customer" to make assertions about the health of your service. And this kind of assertion is not just wrong, it is dangerous, as I am going to explain.
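To make A = a(O) a bit more concrete, here is a minimal sketch of measuring availability purely from observed outputs, the way a proxy or a log processor would see them. The `Response` type, the 500 ms latency threshold and the rule that 4xx responses belong to the normal baseline are all my own assumptions for illustration, not a prescribed definition.

```python
from dataclasses import dataclass


@dataclass
class Response:
    status: int        # HTTP status code observed at the proxy or in the logs
    latency_ms: float  # time the service took to produce the response


def availability(responses, max_latency_ms=500.0):
    """Fraction of observed responses that were neither server errors nor too slow.

    Assumption: 4xx responses (validation, business rules) count as baseline,
    and only 5xx responses or slow responses count against availability.
    """
    if not responses:
        return None  # no traffic observed, so availability is undefined
    good = sum(
        1 for r in responses
        if r.status < 500 and r.latency_ms <= max_latency_ms
    )
    return good / len(responses)


observed = [
    Response(200, 120.0),
    Response(200, 900.0),  # reachable, but too slow
    Response(503, 3.0),    # answered within milliseconds, but a failure
    Response(404, 40.0),   # client error: part of the normal baseline
]
print(availability(observed))  # 0.5
```

Note that nothing here runs inside the service: the function only ever sees O, which is exactly why it can cover the real input domain rather than a single test customer.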

First of all, gradually - especially as far as ops are concerned - that green line on the dashboard that checks your endpoint becomes your availability. People get used to trusting it, and when things go wrong out there and customers jump and shout, you will not believe it for quite a while because your eye sees that green line and trusts it.

And guess what happens when you have such an incident? There will be a post-mortem meeting, all the suits-and-ties will be there, they will identify the root cause as the faulty health check, and you will be asked to go back and fix your Health Check endpoint. And then you start building more and more complexity into your endpoint. Your endpoint gets to know about each and every dependency and all their intricacies. And before you know it, you could have built a complete application beside your main service. And you know what, you have to do it for each and every service, as they are all different.

So don't do it. Don't commit yourself to what you cannot achieve.

So is there no point in having a simplistic endpoint which tells us basic information about the status of the service? Of course there is. Such information is useful, and many load balancers or web proxies require such an endpoint.

But first we need to make absolutely clear what the responsibility of such an endpoint is.

Canary Endpoint

A canary endpoint (the name is courtesy of Jamie Beaumont) is a simplistic endpoint which gathers the connectivity status and latency of all dependencies of a service. It absolutely does not trigger any business activity, there is no "Test Customer" of any kind, and it is not a "Health Endpoint". If it is green, it does not mean your service is available. But if it is red (your canary is dead) then you definitely have a problem.

So how does a canary endpoint work? It basically checks connectivity with its immediate dependencies - including but not limited to:

- External services

- SQL Databases

- NoSQL Stores

- External distributed caches

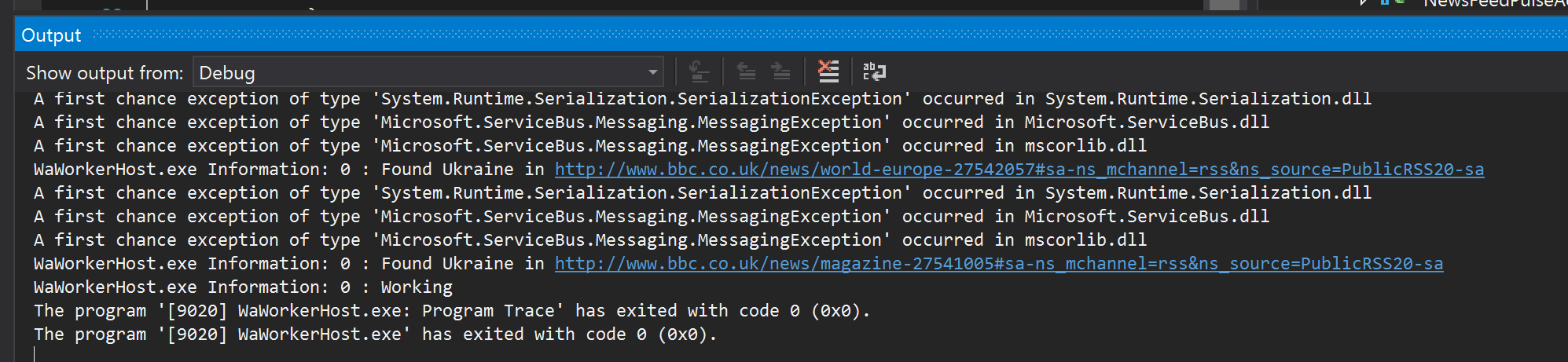

- Service brokers (Azure RabbitMQ, Service Bus)

You probably noticed that External Services appear in italics, i.e. they are a bit different. The reason is that if an external service has a canary endpoint itself, then instead of just doing a connectivity check we call its canary endpoint and add its aggregated result to the result we are returning. So usually the entry point API will generate a cascade of canary chirps that will tell us how things are.
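The cascade can be sketched as a small recursion. This is only an in-memory illustration: the `topology` dictionary, the service names and the `is_reachable` callback are hypothetical stand-ins for real HTTP calls to downstream canary endpoints, and the sketch assumes the dependency graph has no cycles.

```python
def all_ok(report):
    """A report is green only if every one of its checks is green."""
    return all(check["ok"] for check in report["checks"])


def canary(service, topology, is_reachable):
    """Build the canary report for `service`, recursing into downstream services."""
    report = {"service": service, "checks": []}
    for dep in topology.get(service, []):
        if dep in topology:
            # The dependency is itself a service with a canary endpoint:
            # call it and embed its aggregated result in ours.
            nested = canary(dep, topology, is_reachable)
            report["checks"].append(
                {"name": dep, "ok": all_ok(nested), "canary": nested}
            )
        else:
            # A leaf dependency (database, cache, broker): connectivity only.
            report["checks"].append({"name": dep, "ok": is_reachable(dep)})
    return report


# Hypothetical topology: "api" depends on the "orders" service and a database;
# "orders" depends on a cache and a queue.
topology = {"api": ["orders", "sql-db"], "orders": ["cache", "queue"]}
down = {"queue"}

report = canary("api", topology, lambda dep: dep not in down)
print(all_ok(report))  # False: the dead queue surfaces at the entry point
```

A single call to the entry point is enough to see that something two layers down is dead, and the nested report says where.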

Implementation of the connectivity check is generally dependent on the underlying technology. For a Cache service, it suffices to Set a constant value and see it succeed. For a SQL Database, a SELECT 1; query is all that is needed. For an Azure Storage account, it would be enough to connect and get the list of tables. The point here is that none of these is anywhere near a business activity, so you could not - in the remotest sense - think that their success means your business is up and running.
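As a rough sketch of how trivial these checks are meant to be, the snippet below times a SELECT 1; query and a cache Set. To keep it self-contained I am assuming an in-memory SQLite database in place of a real SQL server, and a hypothetical `FakeCache` class in place of a real distributed cache client; `timed_check` is my own helper name.

```python
import sqlite3
import time


def timed_check(name, check):
    """Run one connectivity check and record whether it succeeded and how long it took."""
    start = time.perf_counter()
    try:
        check()
        ok, error = True, None
    except Exception as exc:  # any failure simply means the canary is red
        ok, error = False, str(exc)
    latency_ms = (time.perf_counter() - start) * 1000
    return {"name": name, "ok": ok, "latency_ms": latency_ms, "error": error}


def check_sql():
    # Stand-in for a real SQL server: an in-memory SQLite database.
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("SELECT 1;").fetchone()
    finally:
        conn.close()


class FakeCache:
    """Hypothetical stand-in for a distributed cache client."""

    def __init__(self):
        self._data = {}

    def set(self, key, value):
        self._data[key] = value


cache = FakeCache()


def check_cache():
    cache.set("canary", "chirp")  # set a constant value and see it succeed


def check_broker():
    # Simulates an unreachable service broker.
    raise ConnectionError("connection refused")


results = [
    timed_check("sql", check_sql),
    timed_check("cache", check_cache),
    timed_check("broker", check_broker),
]
for result in results:
    print(result["name"], "ok" if result["ok"] else "FAILED: " + result["error"])
```

Nothing in any of the checks touches a customer, an order or any other business concept, which is the whole point.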

So there you have it. Don't do health endpoints, do canary instead.

Canary Endpoint implementation

A canary endpoint normally gets implemented as an HTTP GET call which returns a collection of connectivity check metrics. You can abstract the logic of checking various dependencies into a library and allow API developers to implement the endpoint by just declaring the dependencies.

We are currently working on an implementation at ASOS (C# and ASP.NET Web API) and there is a possibility of open sourcing it.
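To illustrate what "just declaring the dependencies" could look like, here is a small framework-free sketch. It is not the ASOS implementation: the `Canary` class, its `dependency` decorator and the dependency names are hypothetical, and returning 503 when any check fails is my own assumption about how red might be signalled.

```python
import json


class Canary:
    """Hypothetical library class: collects declared checks and runs them on GET."""

    def __init__(self):
        self._checks = {}

    def dependency(self, name):
        """Decorator that registers a connectivity check under `name`."""
        def register(check):
            self._checks[name] = check
            return check
        return register

    def handle_get(self):
        """Run every declared check and return (HTTP status, JSON body)."""
        results = []
        for name, check in self._checks.items():
            try:
                check()
                results.append({"name": name, "ok": True})
            except Exception as exc:
                results.append({"name": name, "ok": False, "error": str(exc)})
        # Assumption: any failed check turns the whole endpoint red (503).
        status = 200 if all(r["ok"] for r in results) else 503
        return status, json.dumps(results)


canary = Canary()


@canary.dependency("orders-db")
def check_orders_db():
    pass  # a real check would run something like SELECT 1; here


@canary.dependency("payment-service")
def check_payment_service():
    raise TimeoutError("timed out")  # simulate an unreachable dependency


status, body = canary.handle_get()
print(status, body)
```

In a real service, `handle_get` would be wired to a GET route in whatever web framework is in use; the API developer only writes the decorated functions.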

Security of the Canary Endpoint

I am in favour of securing the Canary Endpoint with a constant API key - normally under SSL. This does not provide the highest level of security, but it is enough to make the endpoint much more difficult to break into. At the end of the day, a canary endpoint lists all internal dependencies, components and potentially technologies of a system, which hackers can use to target those components.
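A constant API key check can be as small as the sketch below. The header name `X-Canary-Key` and the key value are hypothetical, and a real deployment would load the key from configuration rather than hard-coding it. The comparison uses `hmac.compare_digest` from the Python standard library so that it does not leak timing information.

```python
import hmac

# Assumption: in a real service this comes from configuration, not source code.
EXPECTED_KEY = "replace-with-a-long-random-secret"


def is_authorised(headers):
    """Return True only if the request carries the expected canary API key."""
    supplied = headers.get("X-Canary-Key", "")
    # Constant-time comparison of the supplied key against the expected one.
    return hmac.compare_digest(supplied.encode(), EXPECTED_KEY.encode())


print(is_authorised({"X-Canary-Key": "replace-with-a-long-random-secret"}))  # True
print(is_authorised({"X-Canary-Key": "guess"}))                              # False
print(is_authorised({}))                                                     # False
```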

Performance impact of Canary Endpoint

Since a canary endpoint does not trigger any business activity, its performance footprint should be minimal. However, since calling a canary endpoint generates a cascade of calls, it might not be wise to iterate through all canary endpoints and call each of them every few seconds: in a highly layered architecture, the deeper canary endpoints get called multiple times in each round.
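A quick count shows how fast this adds up. The sketch below assumes a hypothetical four-service topology (all names are made up) in which every canary calls the canaries of its downstream services, and compares a monitor that polls every canary directly with one that polls only the entry point.

```python
from collections import Counter


def calls_per_round(topology, polled):
    """Count how often each canary is hit when the monitor polls `polled` once."""
    counts = Counter()

    def call(service):
        counts[service] += 1
        for downstream in topology[service]:
            call(downstream)  # each canary cascades to its dependencies

    for service in polled:
        call(service)
    return counts


# Hypothetical layered architecture: web -> orders, catalogue -> payments.
topology = {
    "web": ["orders", "catalogue"],
    "orders": ["payments"],
    "catalogue": ["payments"],
    "payments": [],
}

poll_everything = calls_per_round(topology, polled=list(topology))
poll_entry_only = calls_per_round(topology, polled=["web"])

print(poll_everything["payments"])  # 5 hits per round on the deepest canary
print(poll_entry_only["payments"])  # 2 hits per round
```

Even in this tiny example the deepest service takes five canary calls per round when everything is polled, so polling only the entry points (or polling less frequently) is worth considering.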